Advancing Colour Fidelity in Virtual Production

Research Team

Spencer Idenouye

Kevin Santos

James Rowan

Partners

Dark Slope Studios

NRC IRAP

CTO

Impact

- Cluster rendering optimization for virtual production (VP) in UE5

- Latency reduction in LED volume systems

- Colour pipeline and calibration workflow for VP

Developing a Managed Colour Workflow and Engine Distribution System for In-Camera VFX

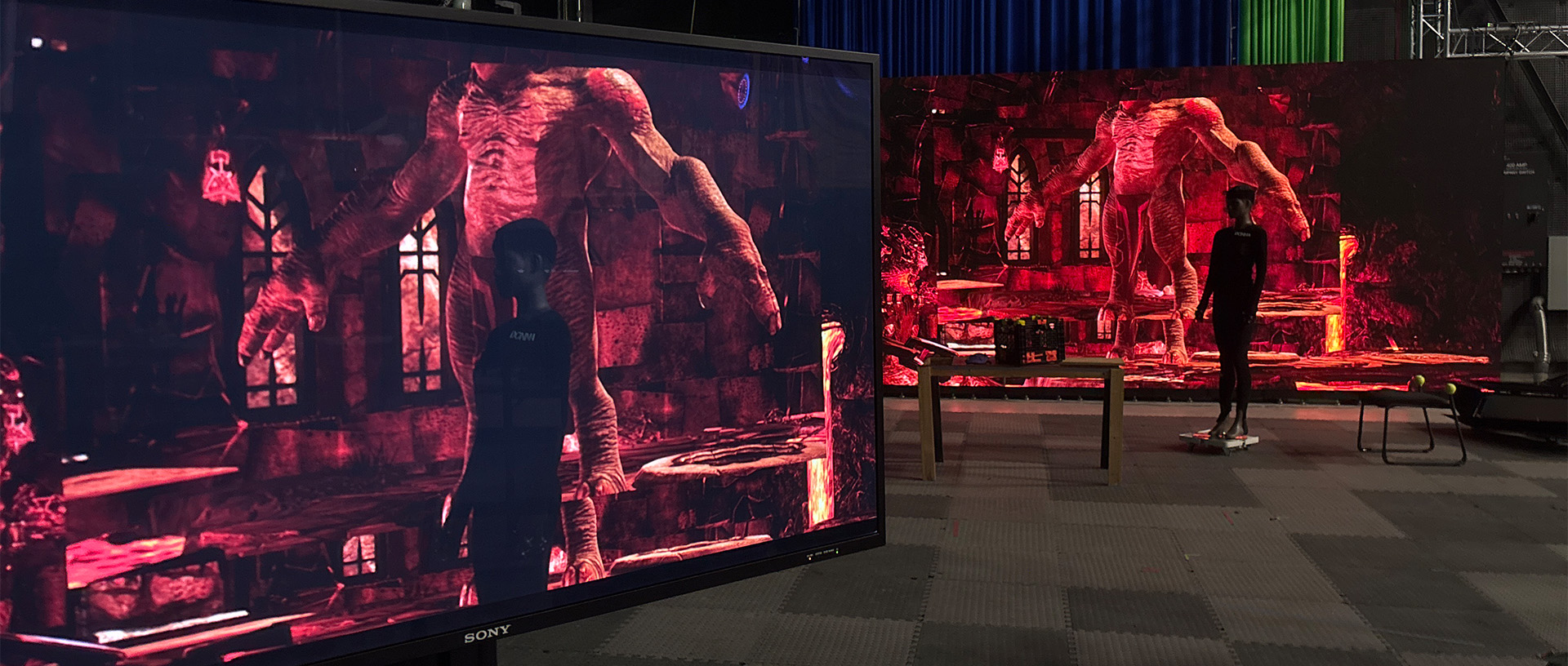

This research project focused on advancing virtual production (VP) workflows, specifically addressing the critical need for consistent colour representation between LED wall displays and camera capture.

Having observed significant discrepancies between the LED wall and the camera image, such as colour shifts and desaturation, the team developed a comprehensive three-step managed colour workflow. This involved establishing a consistent baseline colour space across all hardware and software, performing a camera-specific calibration with OpenVPCal to generate OCIO profiles, and using Unreal Engine’s colour grading tools for technical colour correction. Beyond colour, the project also improved cluster rendering configurations and significantly reduced LED volume latency, enhancing real-time interactivity and synchronization.

The outcomes include greater visual consistency, seamless integration of virtual and physical elements, and a repeatable, production-ready calibration workflow suitable for high-end in-camera VFX projects.

The Challenge of Visual Consistency in Virtual Production

In virtual production environments, a significant challenge arises when the image displayed on an LED wall differs noticeably from what the camera captures. We observed a clear difference: reds shifted towards pink, and the captured image was desaturated and lower in contrast than the LED wall display. To ensure visual consistency and fidelity, especially when shooting with cameras like the RED Komodo X in a Rec. 709 environment and viewing the result on high-end monitors, a colour-managed workflow is essential.
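One way to put numbers on this kind of mismatch (reds drifting towards pink, loss of saturation) is to compare the same patch as sent to the wall and as recorded by the camera in CIELAB, then report the colour difference, chroma change and hue rotation. The sketch below is illustrative and rests on stated assumptions: both inputs are Rec. 709-primaries RGB values in the 0 to 1 range, decoded with a pure 2.4 power law (BT.1886 with a zero black level), and the helper names are hypothetical rather than part of the project's tooling.

```python
import math

# Linear RGB with Rec. 709 primaries (D65 white) to CIE XYZ.
RGB_TO_XYZ = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)
WHITE_D65 = (0.95047, 1.0, 1.08883)


def decode(v, gamma=2.4):
    """Assumed display decoding: pure power law (BT.1886 with zero black)."""
    return v ** gamma


def rgb_to_lab(rgb):
    """Encoded Rec. 709 RGB -> CIELAB (L*, a*, b*) relative to D65."""
    lin = [decode(c) for c in rgb]
    xyz = [sum(m * c for m, c in zip(row, lin)) for row in RGB_TO_XYZ]

    def f(t):
        d = 6 / 29
        return t ** (1 / 3) if t > d ** 3 else t / (3 * d * d) + 4 / 29

    fx, fy, fz = (f(v / w) for v, w in zip(xyz, WHITE_D65))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def compare(displayed, captured):
    """Describe how a captured patch differs from the patch sent to the wall."""
    l1, a1, b1 = rgb_to_lab(displayed)
    l2, a2, b2 = rgb_to_lab(captured)
    h1 = math.degrees(math.atan2(b1, a1)) % 360
    h2 = math.degrees(math.atan2(b2, a2)) % 360
    return {
        "delta_e76": math.dist((l1, a1, b1), (l2, a2, b2)),
        "chroma_change": math.hypot(a2, b2) - math.hypot(a1, b1),
        "hue_shift_deg": (h2 - h1 + 180) % 360 - 180,  # signed, in (-180, 180]
        "lightness_change": l2 - l1,
    }


# Hypothetical example: a pure red sent to the wall, captured as a pinkish red.
print(compare((1.0, 0.0, 0.0), (0.9, 0.35, 0.45)))
```

For a red patch, a negative `hue_shift_deg` means the hue rotated from red towards magenta, which is the "pink" shift, and a negative `chroma_change` corresponds to desaturation. The values in the example call are placeholders, not project measurements.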

The objective of this research was to establish such a workflow within the Dark Slope ICVFX (In-Camera Visual Effects) environment using Unreal Engine 5.5, with the aim of making images “look right”. The project also had to address workflow complexities: desktop monitors used by operators might not be calibrated, leading to further visual mismatches with the LED wall. For larger projects or those requiring high precision, a dedicated and robust colour management solution becomes imperative, even if it demands significant time and resources.

Engineering a Colour-Calibrated Workflow with OCIO and OpenVPCal

Our research established a comprehensive managed colour workflow by focusing on three key steps: baseline configuration, custom calibration, and in-engine colour correction. Beyond colour, the project also enhanced overall virtual production efficiency through advancements in cluster rendering and latency optimization.

- Baseline Configuration

The colour pipeline was standardized by aligning Unreal Engine, Brompton Tessera processors, and LED panel settings to a consistent colour space (e.g., Rec. 709). Key adjustments included disabling HDR and automatic colour settings in Windows and the NVIDIA Control Panel to prevent unwanted colour shifts.

- Custom Calibration

For high-precision needs, we used Netflix’s OpenVPCal to create OCIO profiles tailored to specific cameras and LED displays. This involved capturing test patterns, processing the resulting image sequences in DaVinci Resolve, and generating .ocio files for integration into Unreal Engine.

- Unreal Colour Correction

We used Unreal’s colour grading tools for on-set technical corrections, ensuring alignment between virtual elements and real-world lighting. This is distinct from the creative grading done in post-production.
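The custom calibration step rests on a general idea: display known patches on the wall, record what the camera sees, model the difference, and pre-correct content so the camera sees the intended colour. The sketch below illustrates that idea with a least-squares 3x3 matrix in linear light. It does not reproduce OpenVPCal's actual algorithm or its OCIO output; the function names are hypothetical, and it assumes the patch values have already been linearised and expressed in the same colour space.

```python
def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular; use more varied patches")
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[v / det for v in row] for row in adj]


def apply(m, rgb):
    """Multiply a 3x3 matrix by an RGB triple."""
    return [sum(m[r][k] * rgb[k] for k in range(3)) for r in range(3)]


def fit_matrix(sent, captured):
    """Least-squares M minimising sum ||M s_i - c_i||^2 over patch pairs.

    With patches stacked as rows (S, C are N x 3): M = (C^T S)(S^T S)^-1.
    """
    if len(sent) != len(captured) or len(sent) < 3:
        raise ValueError("need at least three matched patches")
    s = [list(p) for p in sent]
    c = [list(p) for p in captured]
    return matmul(matmul(transpose(c), s), inverse3(matmul(transpose(s), s)))


# Hypothetical usage: fit the wall-to-camera response, then pre-correct content.
patches = [(1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1), (0.5, 0.2, 0.8)]
response = [[0.9, 0.08, 0.02], [0.05, 0.85, 0.10], [0.03, 0.04, 0.93]]
observed = [apply(response, p) for p in patches]
correction = inverse3(fit_matrix(patches, observed))
print(apply(correction, (0.5, 0.3, 0.2)))  # value to send so the camera sees the target
```

If a corrected value falls outside 0 to 1, the target lies outside what the wall and camera can reproduce together, and some form of gamut mapping would be needed; a real calibration would also measure noisy patches rather than the exact synthetic response used here.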

Additional Outcomes:

Cluster Rendering Setup – enabled synchronized multi-display rendering across render nodes, with a consistent engine build distributed via local source control.

Latency Optimization – used Unreal Insights to detect and reduce LED wall latency, improving real-time responsiveness.
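Latency figures are easiest to compare before and after an optimization when they are summarised the same way each time, ideally in frames at the shooting frame rate as well as in milliseconds. The sketch below assumes a hypothetical list of end-to-end latency samples in milliseconds, however they were measured; it does not parse Unreal Insights traces, and the function name is illustrative.

```python
import statistics


def summarize_latency(samples_ms, fps=24.0):
    """Summarise end-to-end latency samples (milliseconds) in ms and in frames."""
    if not samples_ms:
        raise ValueError("no latency samples")
    frame_ms = 1000.0 / fps
    ordered = sorted(samples_ms)
    # Nearest-rank 95th percentile, using an integer ceiling to avoid float error.
    rank = (95 * len(ordered) + 99) // 100
    p95 = ordered[rank - 1]
    mean = statistics.mean(ordered)
    return {
        "mean_ms": mean,
        "p95_ms": p95,
        "max_ms": ordered[-1],
        "mean_frames": mean / frame_ms,
        "p95_frames": p95 / frame_ms,
    }
```

Running it on hypothetical samples captured before and after a change (for example `summarize_latency(before_ms, fps=24.0)` versus `summarize_latency(after_ms, fps=24.0)`) gives directly comparable figures; the 95th percentile is reported alongside the mean because occasional long frames are what operators notice on set.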

A Reproducible, Scalable Pipeline for ICVFX Productions

The resulting workflow provides a scalable framework for managing colour in real-time virtual production environments. Key outcomes include:

- A camera-specific OCIO configuration system for accurate LED colour reproduction.

- Real-time tuning using Unreal’s nDisplay Colour Grading Panel to align LED content with physical sets.

- Source-controlled Unreal Engine distribution for consistent engine/plugin states across teams.
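A source-controlled engine distribution is only useful if every render node actually runs the same build. A lightweight check is to hash each node's engine and plugin directories and flag nodes whose digest differs from the majority. The sketch below is a hypothetical illustration of that check, not the project's distribution system; the directory layout and node names are assumptions.

```python
import hashlib
from collections import Counter
from pathlib import Path


def file_sha256(path, chunk_size=1 << 20):
    """Stream a file through SHA-256 so large engine binaries fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def digest_manifest(manifest):
    """Combine {relative path: file hash} into one order-independent digest."""
    h = hashlib.sha256()
    for rel in sorted(manifest):
        h.update(rel.encode("utf-8"))
        h.update(b"\0")  # separator so a path cannot run into its hash
        h.update(manifest[rel].encode("ascii"))
        h.update(b"\n")
    return h.hexdigest()


def tree_digest(root):
    """Digest every file under one node's engine or plugin directory."""
    root = Path(root)
    manifest = {
        p.relative_to(root).as_posix(): file_sha256(p)
        for p in root.rglob("*")
        if p.is_file()
    }
    return digest_manifest(manifest)


def find_mismatched_nodes(digests):
    """Return nodes whose digest differs from the most common (reference) one."""
    if not digests:
        return []
    reference = Counter(digests.values()).most_common(1)[0][0]
    return sorted(name for name, d in digests.items() if d != reference)
```

In use, each node would report `tree_digest` for its engine directory (paths are deployment-specific), and `find_mismatched_nodes({"node-a": ..., "node-b": ...})` lists the nodes to resync before a shoot. Treating the majority digest as the reference is a simplifying assumption; comparing against the digest of the tagged build in source control would be stricter.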

This technical infrastructure supports both visual accuracy and production scalability, offering measurable improvements in latency, synchronization, and visual blending between virtual and physical assets. The system is well-suited for high-end productions requiring precise visual fidelity and is adaptable across various LED panel specifications and camera systems.