Sign Language Automated Pipeline with Unreal Engine 5

Research Team

Stephan Kozak

Valentina Bachkarova

Mohammad Moussa

Partners

Deaf AI Inc.

NRC IRAP

CTO

Impact

- Real-time ASL pipeline using AI.

- Gesture linking through commands in Unreal Engine.

- Workflow established for future development.

Creating a Motion-Captured AI Character Pipeline for Sign Language Communication

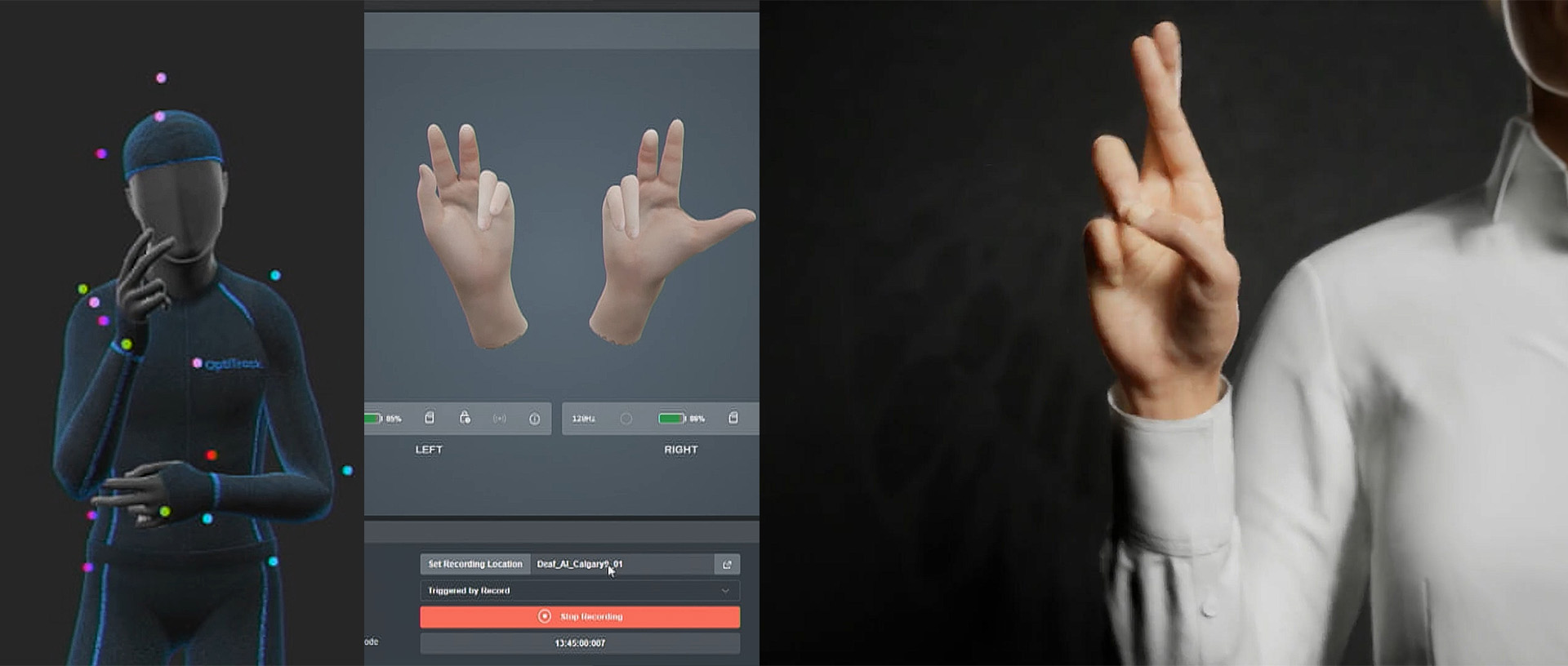

The Realtime AI Character Translation Prototype was a research collaboration between Deaf AI Inc. and Sheridan’s SIRT Centre aimed at developing a real-time animation pipeline for translating spoken or written commands into American Sign Language (ASL) gestures. Using Unreal Engine 5 and the Metahuman framework, the team developed two working prototypes: one simulating an airport baggage claim scenario and another linking AI-generated string commands to gesture animations. Motion capture was used to record a foundational ASL content library, which was then integrated into Unreal via Blueprints and runtime logic. The project delivered a documented pipeline for real-time animation, asset management, and rendering, forming the basis of a future NSERC Applied Research and Development grant. These early results show promise for accessible AI tools that communicate using sign language, enabling broader inclusion across digital interfaces.
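The idea of linking AI-generated string commands to gesture animations can be illustrated with a minimal lookup sketch. This is not the team's implementation, which was built with Unreal Engine Blueprints; it is a language-neutral illustration written in Python. The gesture names, the clip identifiers, the comma-separated command format, and the fallback clip are all hypothetical assumptions.

```python
from typing import Dict, List

# Hypothetical gesture library: command token -> motion-captured clip name.
# These names are illustrative only and do not come from the project.
GESTURE_LIBRARY: Dict[str, str] = {
    "HELLO": "Anim_ASL_Hello",
    "BAGGAGE": "Anim_ASL_Baggage",
    "WHERE": "Anim_ASL_Where",
}

# Hypothetical clip played when a token has no recorded gesture.
FALLBACK_CLIP = "Anim_ASL_Idle"


def resolve_gestures(command_text: str) -> List[str]:
    """Turn a comma-separated command string into an ordered list of clips.

    Tokens are trimmed and upper-cased before lookup, empty tokens are
    skipped, and unknown tokens map to the fallback clip.
    """
    clips: List[str] = []
    for token in command_text.split(","):
        key = token.strip().upper()
        if not key:
            continue
        clips.append(GESTURE_LIBRARY.get(key, FALLBACK_CLIP))
    return clips


if __name__ == "__main__":
    # Example: an assumed AI-generated command string for the baggage scenario.
    print(resolve_gestures("where, baggage"))
```

In an engine setting, the returned clip names would be handed to whatever system plays animations on the character; the lookup table keeps the command vocabulary and the animation library decoupled, so new recordings can be added without changing the command-handling logic.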

Bridging Communication Through Innovation

Deaf AI Inc., in collaboration with Sheridan’s SIRT Centre, sought to explore how real-time animation and artificial intelligence could be leveraged to improve digital accessibility for the Deaf community. Traditional text- and voice-based interfaces often fall short in serving sign language users. This project aimed to define and prototype a system in Unreal Engine 5 that could animate an AI-driven character using motion capture data to communicate through American Sign Language (ASL). The long-term goal is to build inclusive and expressive AI agents capable of translating spoken or written language into sign language in real time.

Prototyping with Metahuman, Motion Capture, and Machine Learning

The methodology centered on creating an animation pipeline framework using modern real-time methods, specifically leveraging Metahuman and Unreal Engine 5 (UE5). The development and runtime environments were entirely based on UE5, which supported crucial aspects such as asset management, collaborative working, character creation, animation, and rendering.

The workflow included motion capture, the integration of machine learning libraries, AI implementation, Unreal Engine Blueprints, and character design. Key project milestones structured this process, focusing on:

- Establishing robust source control and documentation

- Developing an ASL animation content library

- Integrating with Unreal Engine 5.2, the latest version at the time

- Completing rendering and documentation

This comprehensive approach ensured that the project not only built a functional prototype but also established a clear workflow for future advancements.

Foundations for Immersive Performance’s Future Breakthroughs

The collaboration produced two Unreal Engine-based prototypes that demonstrate real-time gesture-driven character interaction. These systems integrate Metahuman avatars, motion capture performance, and AI-driven scripting. The ASL animation library and Blueprint-based control system provide a scalable base for future development. While still at the prototype stage, the project has laid essential groundwork for AI translation tools that prioritize accessibility. It supports Deaf AI’s broader vision for equitable communication platforms and provides a foundation for more complex gesture-based interaction in public-facing or assistive technologies.