Real-Time Compositing Workflow for LED Volume Set Extensions

Research Team

Kevin Santos

Spencer Idenouye

James Rowan

Emerson Chan

Partners

StradaXR

NRC IRAP

CTO

Impact

- Unreal Engine 5 virtual production set extensions

- Live compositing

- Camera calibration

- Color accuracy evaluations

Developing Advanced Workflows for Live Object Compositing and Virtual Set Extensions in LED Volumes

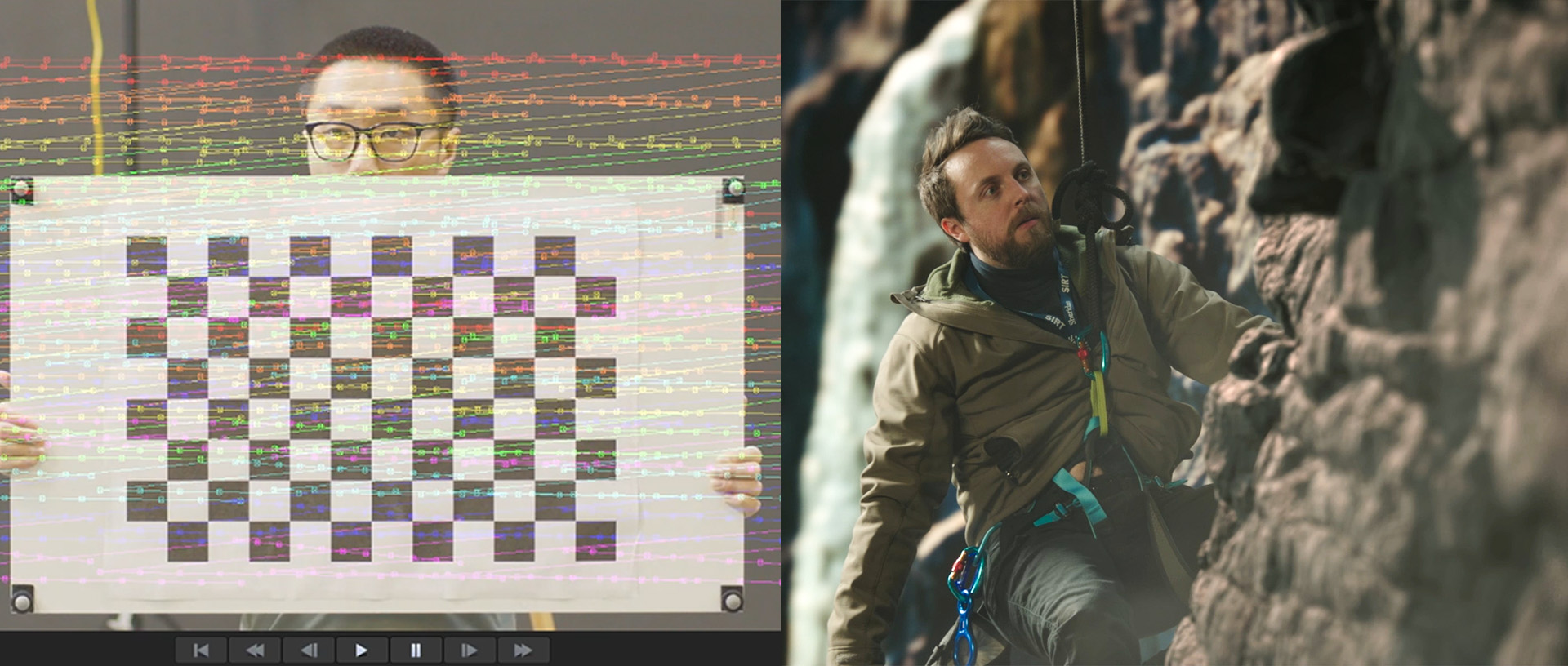

StradaXR wanted to explore live compositing techniques in Unreal Engine 5 that would let virtual set extensions continue the scene beyond the edges of the LED wall during virtual production shoots. The goal was to establish a robust pipeline built on Unreal Engine 5’s nDisplay and Composure plugins, integrated with OptiTrack for accurate camera and object tracking, to achieve realistic set extensions. The methodology involved developing the integrated workflow, testing it rigorously for real-time performance and visual fidelity, and producing comprehensive documentation and training. Key challenges included improving camera tracking accuracy, minimizing system latency, and maintaining color consistency across the pipeline.

The Need for Advanced Real-Time Compositing in Virtual Production

The film and virtual production industries are constantly seeking ways to improve visual effects workflows, particularly within immersive LED volume environments. Live object compositing, a form of real-time compositing (RTC), is a key area for development: it blends physical objects and actors seamlessly with virtual environments and set extensions. This capability is crucial for capturing realistic augmented reality (AR) elements directly in camera during live shoots.

This project aimed to advance real-time virtual production techniques by establishing a robust workflow and pipeline for live object compositing. The primary goal was to integrate essential tools, namely Unreal Engine’s nDisplay and Composure plugins, with the OptiTrack motion capture system to achieve realistic set extensions under live shooting conditions. A secondary but vital goal was to maintain real-time performance and high visual fidelity so that live objects blend seamlessly with the virtual environments displayed on the LED volume.

Project Outcomes, Deliverables, and Our Findings

This project established a workflow and pipeline that integrates nDisplay, Composure, and OptiTrack for real-time object compositing. It also produced practical insights into virtual production techniques, including best practices for choosing between passive and active tracking markers.

The SIRT team implemented and demonstrated the project results at the StradaXR studio and delivered in-depth documentation so that StradaXR can continue using the workflow after the project ends.